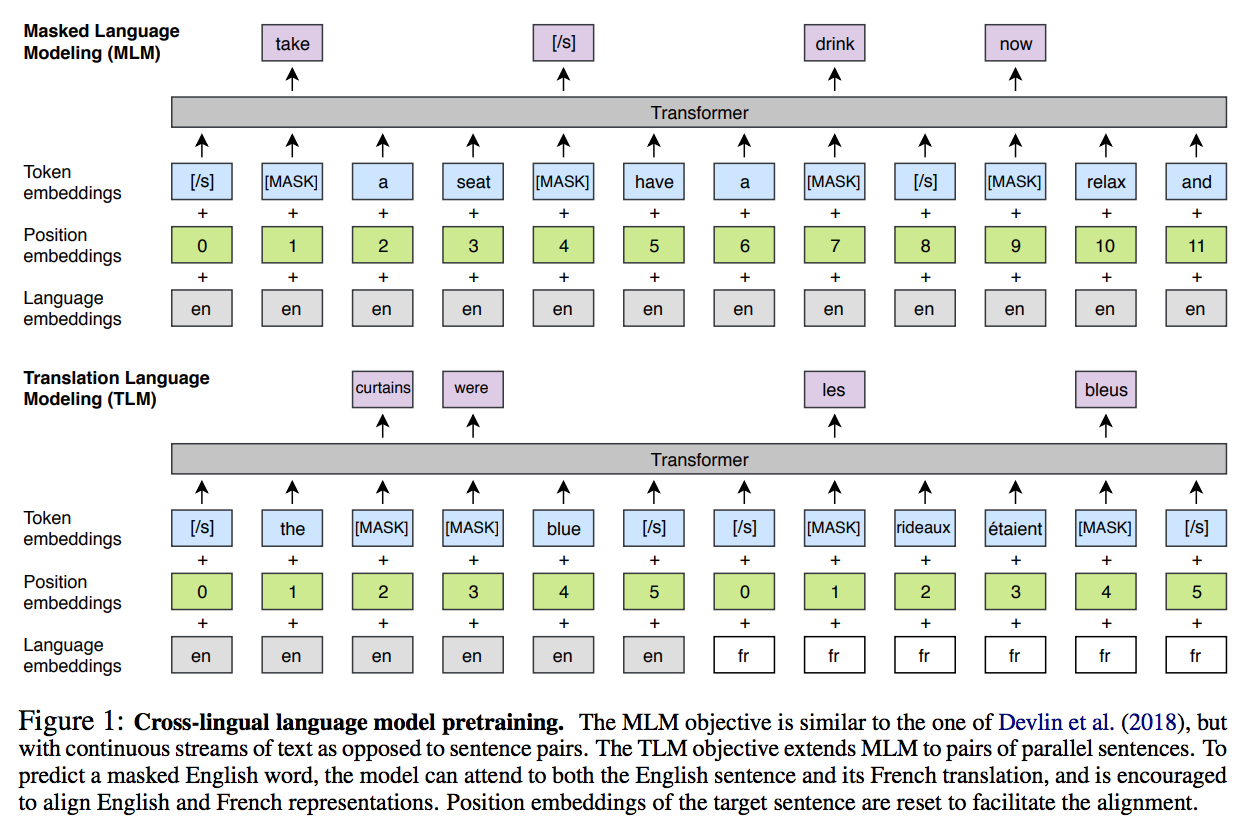

![Model structure of the label-masked language model. [N-MASK] is a mask...](https://www.researchgate.net/publication/337187647/figure/fig2/AS:824406486040589@1573565231490/Model-structure-of-the-label-masked-language-model-N-MASK-is-a-mask-token-containing.png)

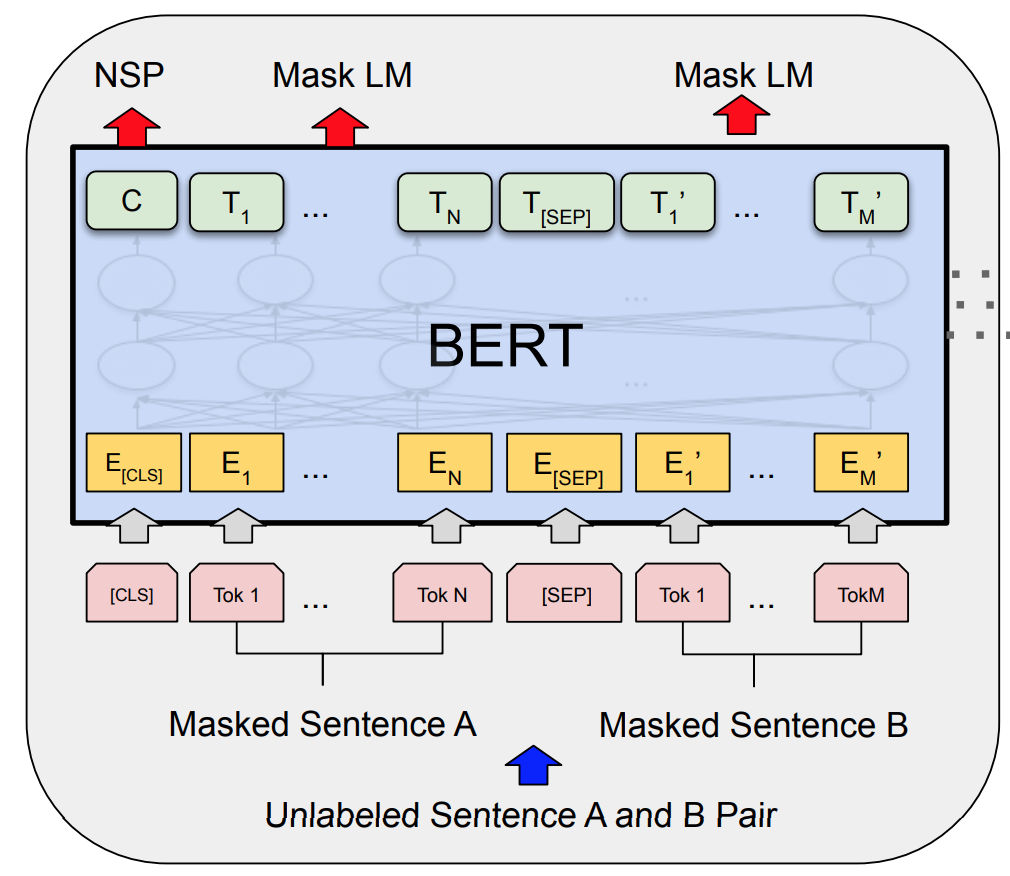

Model structure of the label-masked language model. [N-MASK] is a mask...

Guillaume Desagulier on Twitter: "Using BERT-based masked language modeling to 'predict' the most likely adjectives and verbs that enter the multiple-slot construction <it BE ADJ to V-inf that>. https://t.co/lnGRKON0BS"

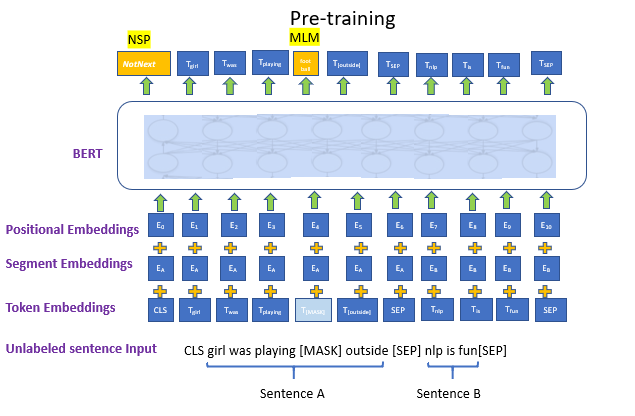

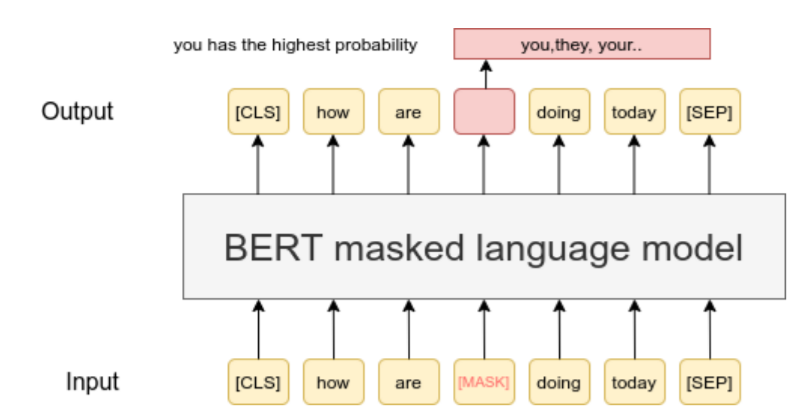

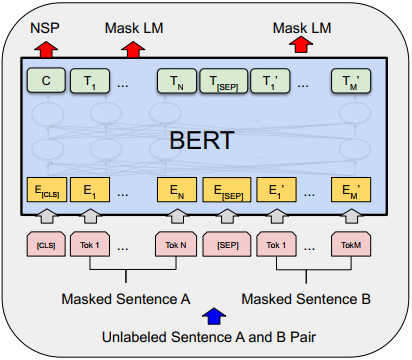

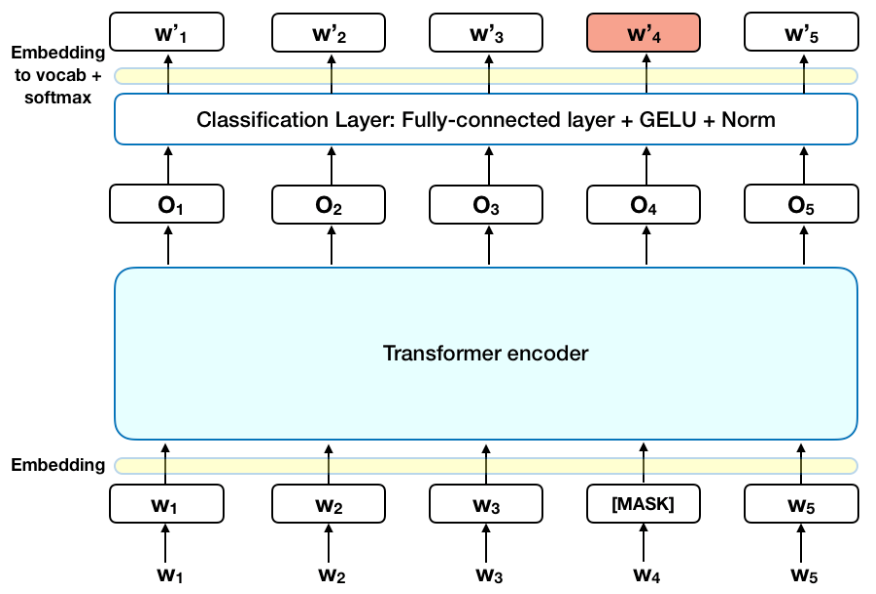

Understanding Masked Language Models (MLM) and Causal Language Models (CLM) in NLP | by Prakhar Mishra | Towards Data Science

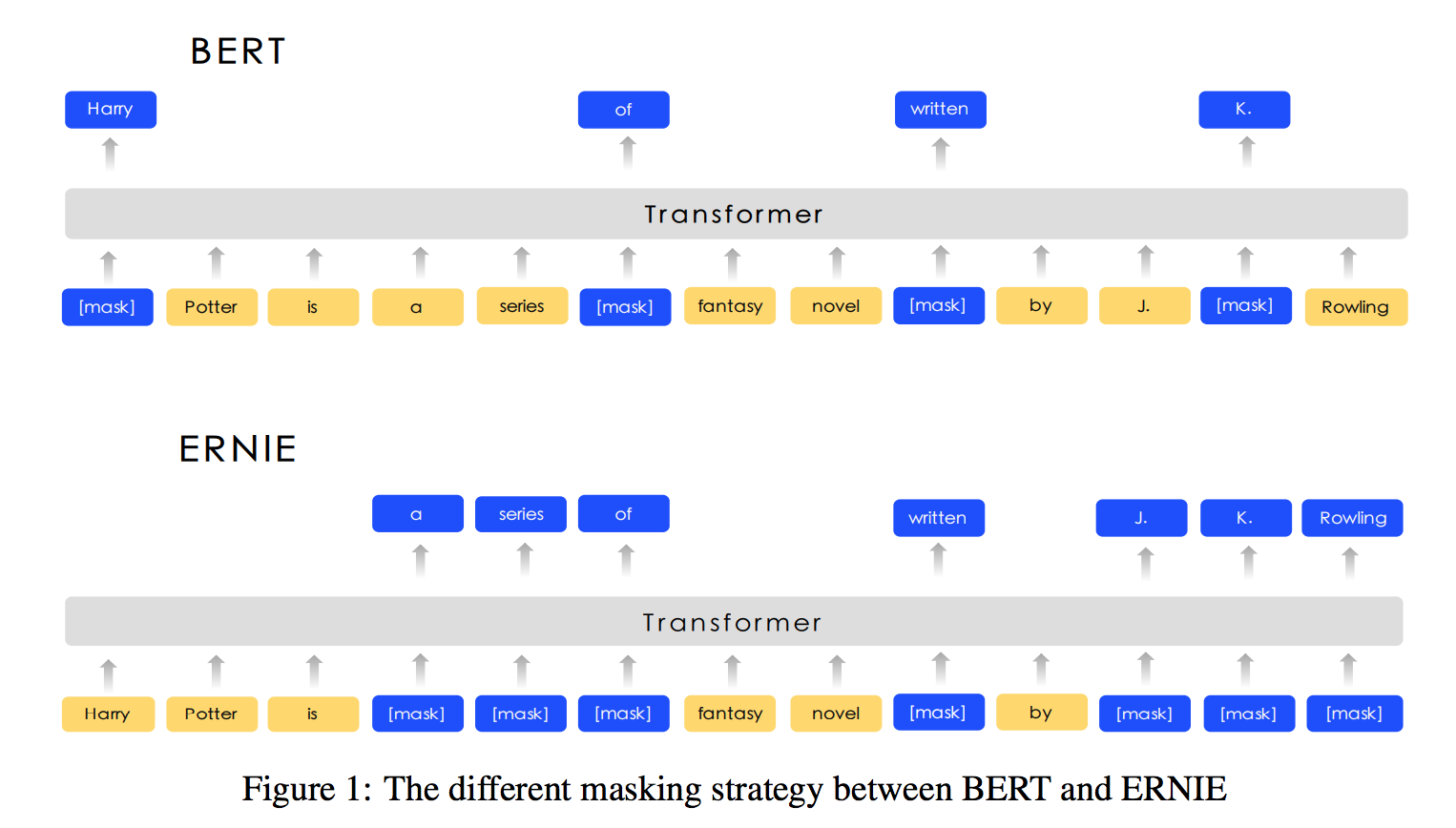

![[PDF] What the [MASK]? Making Sense of Language-Specific BERT Models | Semantic Scholar](https://d3i71xaburhd42.cloudfront.net/6551f742b825561d26242ca8a646ba0e33fb109f/3-Figure1-1.png)